Are you looking for the answers to NPTEL Deep Learning Assignment 2? This article will help you with the answers for the National Programme on Technology Enhanced Learning (NPTEL) course "Deep Learning", Assignment 2.

What is Deep Learning?

Deep learning is a branch of machine learning that trains multi-layer neural networks to learn representations directly from data. The questions in Assignment 2 focus on the pattern-classification background behind it: discriminant functions, Bayesian decision surfaces for normally distributed classes, linear classifiers and their weight-update rule, gradient descent, and the k-nearest-neighbour rule.

CRITERIA TO GET A CERTIFICATE

Average assignment score = 25% of the average of best 8 assignments out of the total 12 assignments given in the course.

Exam score = 75% of the proctored certification exam score out of 100

Final score = Average assignment score + Exam score

YOU WILL BE ELIGIBLE FOR A CERTIFICATE ONLY IF THE AVERAGE ASSIGNMENT SCORE >=10/25 AND EXAM SCORE >= 30/75. If one of the 2 criteria is not met, you will not get the certificate even if the Final score >= 40/100.
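The scoring rules above can be checked with a few lines of Python. This is only a sketch: the function name `final_score` is made up for illustration, and it assumes every assignment and the exam are marked out of 100.

```python
def final_score(assignment_scores, exam_score_out_of_100):
    """Return (assignment_part, exam_part, final, eligible) under the stated criteria.

    Assumption: each assignment score is out of 100.
    """
    # Keep the best 8 assignments out of those submitted.
    best_eight = sorted(assignment_scores, reverse=True)[:8]
    # 25% of the average of the best 8 assignments (out of 25).
    assignment_part = 0.25 * sum(best_eight) / 8
    # 75% of the proctored exam score (out of 75).
    exam_part = 0.75 * exam_score_out_of_100
    final = assignment_part + exam_part
    # Both thresholds must be met, regardless of the final score.
    eligible = assignment_part >= 10 and exam_part >= 30
    return assignment_part, exam_part, final, eligible


# Perfect assignments but a weak exam: final is above 40, yet no certificate.
print(final_score([100] * 12, 30))
```

The second criterion is what matters in the example: the exam part is below 30/75, so the certificate is not awarded even though the final score is above 40.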

Below you can find the answers for NPTEL Deep Learning Assignment 2

NPTEL Deep Learning Assignment 2 Answers:-

Q1. Suppose you are solving an n-class problem. How many discriminant functions will you need?

a) n-1

b) n

c) n+1

d) n-2

Answer:- b (one discriminant function per class; x is assigned to the class whose function is largest)

Q2. Suppose we choose the discriminant function g_i(x) as a function of the posterior probability, i.e. g_i(x) = f(p(w_i|x)). Which of the following cannot be the function f()?

a) f(x) = a^x, where a > 1

b) f(x) = a^(-x), where a > 1

c) f(x) = 2x + 3

d) f(x) = exp(x)

Answer:- b
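The reasoning is that f() must be monotonically increasing, so that the class with the largest posterior still gets the largest discriminant value. A minimal sketch of that check follows; the posterior values, the base `a = 2.0` and the helper name `classify` are assumptions chosen for illustration.

```python
import math


def classify(posteriors, f):
    """Return the index of the class whose discriminant f(p(w_i|x)) is largest."""
    scores = [f(p) for p in posteriors]
    return max(range(len(scores)), key=scores.__getitem__)


posteriors = [0.2, 0.5, 0.3]  # hypothetical p(w_i|x) for three classes
a = 2.0                       # any base a > 1

increasing = {
    "a^x": lambda p: a ** p,
    "2x + 3": lambda p: 2 * p + 3,
    "exp(x)": math.exp,
}
decreasing = lambda p: a ** (-p)

# Decision made directly on the posteriors.
baseline = classify(posteriors, lambda p: p)

# Every increasing f keeps the same decision as the raw posteriors.
same_decision = all(classify(posteriors, f) == baseline for f in increasing.values())

# The decreasing f picks the class with the smallest posterior instead.
flipped = classify(posteriors, decreasing)
```

With these numbers the increasing functions all select the class with posterior 0.5, while a^(-x) selects the class with posterior 0.2.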

Q3. What will be the nature of the decision surface when the covariance matrices of the different classes are identical but otherwise arbitrary? (Assume all the classes have equal class probabilities.)

a) Always orthogonal to two surfaces

b) Generally not orthogonal to two surfaces

c) Bisector of the line joining the two means, but not always orthogonal to the two surfaces.

d) Arbitrary

Answer:- c
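For two Gaussian classes with a shared covariance matrix Σ and equal priors, the decision surface is the hyperplane w·(x - x0) = 0 with w = Σ^(-1)(μ1 - μ2) and x0 = (μ1 + μ2)/2. The sketch below checks both properties numerically; the means and covariance are made-up values and the 2x2 helpers are written out only to keep the example dependency-free.

```python
def inv2(m):
    """Inverse of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def matvec(m, v):
    """Multiply a 2x2 matrix by a length-2 vector."""
    return [m[0][0] * v[0] + m[0][1] * v[1],
            m[1][0] * v[0] + m[1][1] * v[1]]


mu1, mu2 = [0.0, 0.0], [4.0, 0.0]   # hypothetical class means
sigma = [[2.0, 1.0], [1.0, 2.0]]    # shared, non-diagonal covariance

diff = [mu1[0] - mu2[0], mu1[1] - mu2[1]]
w = matvec(inv2(sigma), diff)       # normal of the decision hyperplane
midpoint = [(mu1[0] + mu2[0]) / 2, (mu1[1] + mu2[1]) / 2]


def g(x):
    """Positive on the w1 side, negative on the w2 side, zero on the surface."""
    return w[0] * (x[0] - midpoint[0]) + w[1] * (x[1] - midpoint[1])


# 2-D cross product: zero only if w is parallel to the line joining the means.
cross = w[0] * diff[1] - w[1] * diff[0]
```

Here `g(midpoint)` is zero, so the surface bisects the line joining the means, while `cross` is non-zero, so the surface is not orthogonal to that line. Orthogonality holds when Σ is a multiple of the identity.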

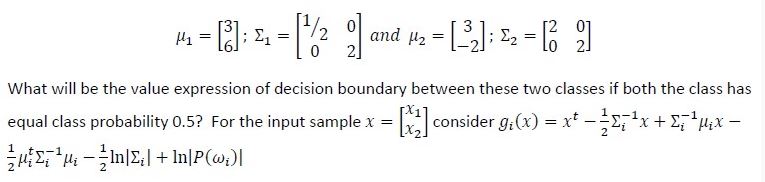

Q4. The mean and variance of all the samples of two different normally distributed classes w1 and w2 are given in the image above.

a) x2 = 3.514 - 1.12 x1 + 0.187 x1^2

b) x1 = 3.514 - 1.12 x2 + 0.187 x2^2

c) x1 = 0.514 - 1.12 x2 + 0.187 x2^2

d) x2 = 0.514 - 1.12 x2 + 0.187 x2^2

Answer:- c


Q5. For a two-class problem, the linear discriminant function is given by g(x) = a^t y, where y is the augmented feature vector. What is the updating rule for finding the weight vector a?

a) Adding the sum of all misclassified augmented feature vectors, multiplied by the learning rate, to the current weight vector.

b) Subtracting the sum of all misclassified augmented feature vectors, multiplied by the learning rate, from the current weight vector.

c) Adding the sum of all augmented feature vectors belonging to the positive class, multiplied by the learning rate, to the current weight vector.

d) Subtracting the sum of all augmented feature vectors belonging to the negative class, multiplied by the learning rate, from the current weight vector.

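Answer:- a (the perceptron criterion has gradient equal to minus the sum of the misclassified vectors, so a gradient descent step adds that sum)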
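The batch perceptron rule, a(k+1) = a(k) + η Σ y over the misclassified y, can be sketched as below. The sketch assumes the usual convention that augmented vectors of the negative class are negated, so that a correct weight vector satisfies a^t y > 0 for every sample. The function name and the toy data are illustrative only.

```python
def batch_perceptron(samples, labels, eta=1.0, max_epochs=100):
    """Learn a weight vector a such that a^t y > 0 for all normalised samples."""
    # Augment each sample with a leading 1; negate vectors of the negative class.
    ys = []
    for x, label in zip(samples, labels):
        y = [1.0] + list(x)
        ys.append(y if label > 0 else [-v for v in y])

    a = [0.0] * len(ys[0])
    for _ in range(max_epochs):
        # A sample is misclassified when a^t y <= 0.
        misclassified = [y for y in ys
                         if sum(ai * yi for ai, yi in zip(a, y)) <= 0]
        if not misclassified:
            break
        # Add eta times the sum of the misclassified vectors to a.
        total = [sum(column) for column in zip(*misclassified)]
        a = [ai + eta * ti for ai, ti in zip(a, total)]
    return a


samples = [(2, 2), (3, 3), (-1, -1), (-2, -1)]  # toy, linearly separable
labels = [1, 1, -1, -1]
weights = batch_perceptron(samples, labels)
```

On linearly separable data such as this, the loop stops as soon as no sample is misclassified.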

Q6. For minimum distance classifier which of the following must be satisfied?

a) All the classes should have identical covariance matrix and diagonal matrix

b) All the classes should have identical covariance matrix but otherwise arbitrary

c) All the classes should have equal class probability

d) None of above

Answer:- b

Q7. Which of the following is the updating rule of the gradient descent algorithm? Here ▽ is the gradient operator and η is the learning rate.

a) a_{n+1} = a_n - η▽F(a_n)

b) a_{n+1} = a_n + η▽F(a_n)

c) a_{n+1} = a_n - η▽F(a_{n-1})

d) a_{n+1} = a_n + η▽F(a_{n-1})

Answer:- a
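The update steps against the gradient evaluated at the current point. A minimal sketch on a one-dimensional example follows; the objective F(a) = (a - 3)^2, the starting point and the step size are assumptions chosen for illustration.

```python
def gradient_descent(grad, a0, eta=0.1, steps=100):
    """Repeat a <- a - eta * grad(a), using the gradient at the current a."""
    a = a0
    for _ in range(steps):
        a = a - eta * grad(a)
    return a


# F(a) = (a - 3)^2 has gradient 2 * (a - 3) and its minimum at a = 3.
minimum = gradient_descent(lambda a: 2 * (a - 3), a0=0.0)
```

Flipping the sign to a + η▽F(a) would move uphill instead, away from the minimum.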

Q8. The decision surface between two normally distributed classes w1 and w2 is shown in the figure. Which of the following is true?

a) p(w1) = p(w2)

b) p(w2) > p(w1)

c) p(w1) > p(w2)

d) None of the above

Answer:- d

Q9. In the k-nearest neighbours (k-NN) algorithm, how do we classify an unknown object?

a) Assigning the label which is most frequent among the k nearest training samples

b) Assigning the unknown object to the class of its nearest neighbour among training sample

c) Assigning the label which is most frequent among all the training samples except the k farthest neighbours

d) None of these

Answer:- a
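The k-NN rule takes a majority vote among the k training samples closest to the query. A minimal sketch using Euclidean distance is shown below; the function name `knn_predict` and the toy training set are made up for illustration.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Return the most frequent label among the k nearest training samples."""
    # Sort training samples by Euclidean distance to the query and keep k.
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


train = [
    ((0, 0), "A"), ((1, 0), "A"), ((0, 1), "A"),
    ((5, 5), "B"), ((6, 5), "B"), ((5, 6), "B"),
]

near_a = knn_predict(train, (0.5, 0.5), k=3)
near_b = knn_predict(train, (5.5, 5.5), k=3)
```

Setting `k=1` reduces this to the plain nearest-neighbour rule described in option b.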

Q10. What is the direction of the weight vector w.r.t. the decision surface for a linear classifier?

a) Parallel

b) Normal

c) At an inclination of 45 degrees

d) Arbitrary

Answer:- b
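For a linear discriminant g(x) = w·x + b, any two points p and q on the decision surface satisfy g(p) = g(q) = 0, so w·(q - p) = 0: the weight vector is perpendicular to every direction lying in the surface. A small numerical check is sketched below, with made-up values for w and b.

```python
w = [2.0, -1.0]  # hypothetical weight vector
b = 3.0          # hypothetical bias


def g(x):
    """Linear discriminant g(x) = w . x + b."""
    return w[0] * x[0] + w[1] * x[1] + b


# Two points on the decision surface g(x) = 0, i.e. the line x2 = 2 * x1 + 3.
p = [0.0, 3.0]
q = [1.0, 5.0]

# Direction lying inside the decision surface.
along = [q[0] - p[0], q[1] - p[1]]

# Dot product of the weight vector with that direction.
dot = w[0] * along[0] + w[1] * along[1]
```

The dot product comes out as zero, which is what "normal to the decision surface" means.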


Disclaimer: We do not claim that these answers are 100% correct. They are based solely on our own knowledge, and by posting them we are just trying to help students, so we urge you to do your assignment on your own.

If you have any suggestions, comment below or contact us at [email protected]

If you found this article interesting and helpful, don't forget to share it with your friends.